REGULATION ON A NOTICE AND ACTION MECHANISM AND CONTENT MODERATION PROCEDURES

WHAT IS THE AIM OF OUR PROPOSAL?

The internet is an empowering tool that allows us to communicate globally, meet each other, build networks, join forces, access information and culture, and express and spread political opinions.

Unfortunately, platforms such as YouTube, Instagram, Twitter, and TikTok filter and moderate content with a lot of collateral damage. Too often, hateful content, especially content targeting minority groups, remains online, while legitimate posts, videos, accounts, and ads are removed and the platforms make these removals difficult to contest. This has serious implications for freedom of expression online. To make our internet safer and more democratic, we need clear rules on how platforms should handle content and better protect our fundamental rights online. New procedural rules could help ensure that illegal content gets taken down while legal content stays up.

The Greens/EFA’s proposal sets out the first comprehensive set of rules on how online platforms should deal with illegal content, and it introduces safeguards for content moderation under their terms and conditions. This proposal, which was drafted in a collaborative process with experts and civil society organisations, is what we would like to see in the upcoming EU Digital Services Act.

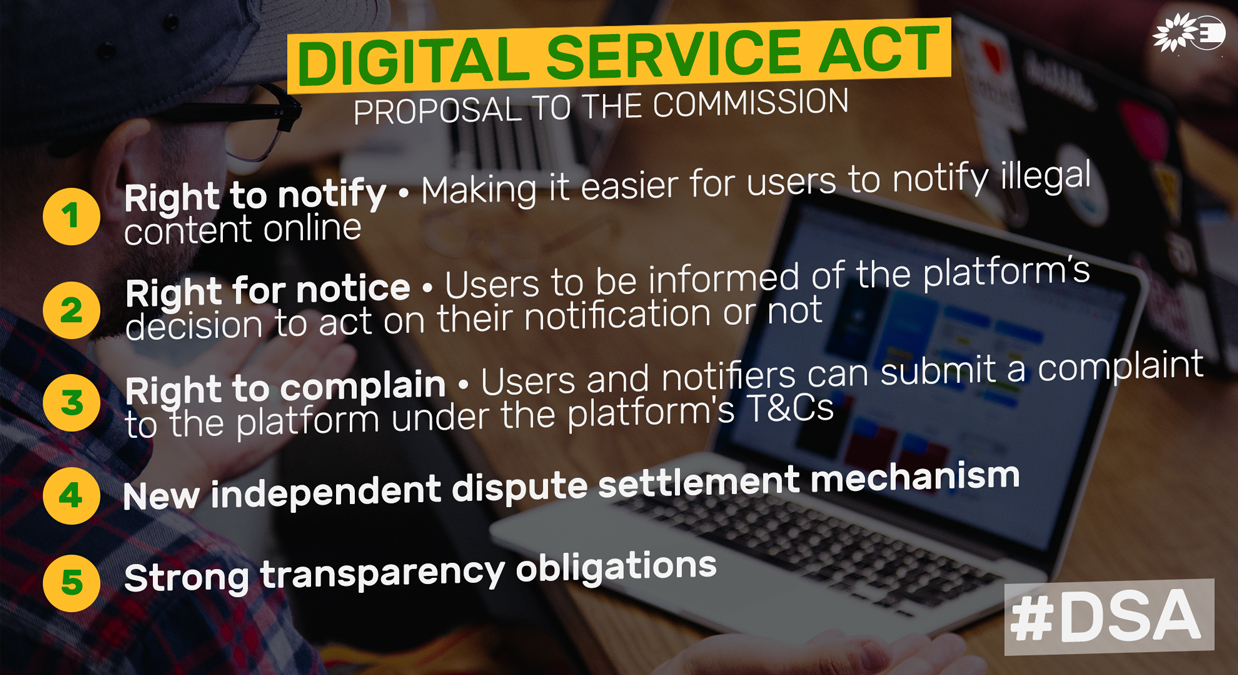

Among its solutions, the proposal introduces a right to notify, a right to counter-notice, specific complaint and redress mechanisms, stronger transparency obligations, and a new independent dispute settlement mechanism for content moderation issues.

SCOPE

The proposal should apply to online platforms providing information society services that target the EU market, regardless of where the service is established or registered or has its principal place of business. Non-commercial services and services with fewer than 100,000 monthly active users will be exempt from the proposal.

KEY POINTS

Citizens’ rights

The proposal provides for new rights and gives citizens more control over online content. These include:

- A new right to notify – making it easy for users to notify hosting service providers of allegedly illegal content.

- A new right of the notifier and the content provider to be informed – companies will have to inform the content provider if a notice is filed against their content. They will also have to inform the notifier whether or not they have decided to act on the notice.

- A new right to counter-notice – upon receipt of a notice, the hosting service provider will have to inform the content provider that they can issue a counter-notice, how to file a complaint with the hosting service provider, and how either party can appeal a decision to the competent authority.

- Judges decide whether content is illegal – platforms must not decide what is allowed on the internet. This is what we have judges for.

- A right to independent dispute resolution for removals under terms and conditions – when large platforms remove perfectly legal content, freedom of expression suffers. At the same time, toxic content can be hurtful and traumatizing even though it is legal. In these cases, users should be able to turn to an independent entity, which should decide on the content.

Rules for businesses

The proposal imposes different obligations such as:

- An obligation to disable illegal content – upon notice from a notifier, the hosting service provider will have to disable access to content that is manifestly illegal. Once the competent authority has decided that the content is illegal, the hosting service provider will be required to remove it expeditiously.

- Requirements for terms and conditions — terms and conditions should be fair, accessible, predictable, non-discriminatory, transparent, and in compliance with the Charter of Fundamental Rights.

- Standard rules for notices of content that might violate terms and conditions – hosting service providers will need to establish mechanisms ensuring that notifiers can submit notices that are precise and respect a certain set of rules.

- Transparency obligations — hosting service providers shall provide clear information about their notice and action procedures.

- Imposing a complaint mechanism — this enables the content provider and the notifier to submit a complaint to the hosting service provider.
